Archive

Visualizing Probability: Roulette

I wrote a post over on the Society of Information Risk Analysts blog and I was having so much fun, I just had to continue. I focused this work on the American version of roulette, which has “0” and “00” (the European version has only “0”, which makes its odds less favorable to the house). The American version also has a “Five Numbers” betting option, which the European version doesn’t have.

According to this site, the American version of roulette runs about 60-100 spins in an hour. I figured maybe 4 hours in the casino and, being conservative, decided to model 250 spins of roulette. I then chose $5 bets. The amount isn’t significant: changing the bet would only change the scale on the left, not the visuals produced. I then ran 20,000 simulations of 250 roulette spins and recorded the losses or gains from the bets along the way. One way to think of this is like watching 20,000 people each play 250 spins of roulette and recording and plotting the outcomes.
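For anyone who wants to play along, here is a minimal sketch of that kind of simulation in Python. It is not the code behind the graphics in this post; the function names, the fixed seed and the scaled-down player count in the demo call are all assumptions made for illustration.

```python
import random

# American wheel: 38 pockets (0, 00, and 1-36).
POCKETS = 38

def simulate_player(numbers_covered, payout, spins=250, bet=5, rng=random):
    """Return one player's running profit/loss after each spin."""
    total = 0
    path = []
    for _ in range(spins):
        # The bet wins when the ball lands in one of the covered pockets.
        if rng.randrange(POCKETS) < numbers_covered:
            total += bet * payout
        else:
            total -= bet
        path.append(total)
    return path

def simulate_many(numbers_covered, payout, players=20_000, spins=250, bet=5, seed=1):
    """Return the final profit/loss for each simulated player."""
    rng = random.Random(seed)  # hypothetical fixed seed, only for repeatability
    return [simulate_player(numbers_covered, payout, spins, bet, rng)[-1]
            for _ in range(players)]

# Single-number bet (35 to 1), scaled down to 2,000 players to keep it quick.
finals = simulate_many(numbers_covered=1, payout=35, players=2_000)
print("worst:", min(finals), "best:", max(finals))
print("share of players below zero:", sum(f < 0 for f in finals) / len(finals))
```

Plotting every player's path instead of just the final value gives the kind of picture shown in the sections that follow.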

I present this as a way to understand the probabilities of the different betting options in roulette. I leveraged the names and payout information from fastodds.com. The main graphic represents the progression of the 20,000 players through the spins. Everyone starts at zero and either goes up or down depending on lady luck. The distribution at the end shows the relative frequency of the outcomes.

Enough talking, let’s get to the pictures.

Betting on a single number

What’s interesting is the pattern that forms from the slow, steady march of losing money punctuated by large (35 to 1) wins. Notice there are a few unlucky runs with no wins at all (the red line starts at zero and proceeds straight down to -$1,250, which is 250 losing $5 bets). Also notice in the distribution on the right that just over half of the distribution falls under zero (the horizontal line). The benefit will always go to the house.

Betting on a pair of numbers

Same type of pattern, but we see the scale changing, the highs aren’t as high and the lows aren’t as low. None of the 20,000 simulations lost the whole time.

Betting on Three Numbers

Betting on Four Number Squares

Betting on Five Numbers

Betting on Six Numbers

Betting on Dozen Numbers or a Column

Betting on Even/Odd, Red/Black, High/Low

And by this point, when we have 1 to 1 payout odds, the pattern is gone along with the extreme highs and lows.

Mixing it Up

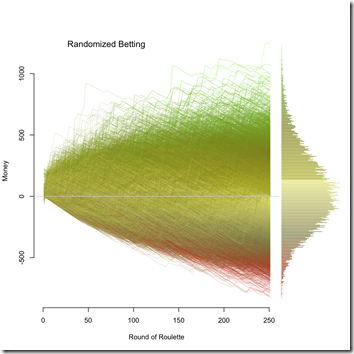

Because it is possible to simulate most any pattern of betting, I decided to try random betting. During any individual round, the bet would be on any one of the eight possible bets, all for $5. The output isn’t really that surprising.

Rolling it up into one

Pun intended. While these graphics help us understand the individual strategies, they don’t really help us compare between them. In order to do that I created a violin plot (the red line represents the mean across the strategies).

Looking at the red line, they all have about the same mean with the exception of Five Numbers (6-1). This means that over time the gambler should average just over a 5% loss (or a loss of nearly 8% with five-number bets). We can see that larger odds stretch the range out, while smaller odds cluster much more tightly around a slight loss. The “scatter” strategy does not improve the outcome and is just a combination of the other distributions. As mentioned, the 6-1 odds (Five Numbers) bet does stick out here as a slightly worse bet than the others.
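The means in the violin plot can be checked with a quick expected-value calculation. This is a sketch that assumes the standard American payouts; the post only states some of them (35-1, 17-1, 6-1, 2-1 and 1-1), so the 11-1, 8-1 and 5-1 entries and the names used here are my assumptions.

```python
from fractions import Fraction

POCKETS = 38  # American wheel: 0, 00, and 1-36

# (bet name, pockets covered, payout-to-1); standard payouts assumed
BETS = [
    ("Single number", 1, 35),
    ("Pair", 2, 17),
    ("Three numbers", 3, 11),
    ("Four numbers", 4, 8),
    ("Five numbers", 5, 6),
    ("Six numbers", 6, 5),
    ("Dozen or column", 12, 2),
    ("Even-money bets", 18, 1),
]

def expected_return(covered, payout):
    """Expected profit per unit staked: win `payout` or lose the stake."""
    p_win = Fraction(covered, POCKETS)
    return p_win * payout - (1 - p_win)

for name, covered, payout in BETS:
    print(f"{name:16s} {float(expected_return(covered, payout)):+.2%}")
```

Every bet works out to -2/38 of the stake (about -5.26%) except Five Numbers, which is -3/38 (about -7.89%).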

Lastly, I want to turn back to a comment on the fastodds.com website:

While I may disagree that the only bets to avoid are limited to those (had to get that in), I also disagree with the blanket statement. Since they all lose more often than they win, trying to get less-sucky odds seems a bit, well, counter-intuitive. I would argue that the bets to avoid are not the same for every gambler. The bets should align with the tolerance of the gambler. For example, if someone is risk-averse, staying with the 2-1 or 1-1 payouts would limit the exposure to loss, while those who are more risk-seeking may go for the 17-1 or 35-1 payout – the bigger the risk, the bigger the reward. Another thing to consider is that the smaller odds win more often. If the thrill of winning is important, perhaps staying away from the bigger odds is a good strategy.

Now that you’re armed with this information, if you still have questions, the Roulette Guru is available to advise based on his years of experience.

AES on the iPhone isn’t broken by Default

I wanted to title this “CBC mode in the AES implementation on the iPhone isn’t as effective as it could be” but that was a bit too long. Bob Rudis forwarded this post, “AES on the iPhone is broken by Default” to me via twitter this morning and I wanted to write up something quick on it because I responded “premise is faulty in that write up” and this ain’t gonna fit in 140 characters. Here is the premise I’m talking about:

In order for CBC mode to perform securely, the IV must remain impossible for the attacker to derive or predict.

This isn’t correct. In order for CBC mode to be effective the initialization vector (IV) should be unique (i.e. random), preferably per individual use. To correct the statement: In order for AES to perform securely, the key must remain impossible for the attacker to derive or predict. There is nothing in the post that makes the claim that the key is exposed or somehow able to be derived or predicted.

Here’s the thing about IVs: they are not secret. If there is a requirement to keep an IV secret, the cryptosystem is either designed wrong or has some funky restrictions and I’ll be honest, I’ve seen both. In fact, the IV is so not secret, the IV can be passed in the clear (unprotected) along with the encrypted message and it does not weaken the implementation. Along those lines, there are critical cryptosystems in use that don’t use IVs. For example, some financial systems leverage ECB mode, which doesn’t use an IV (and has its own problems). Even a bad implementation of CBC is better than ECB. Keep that in mind because apparently ECB is good enough for much of the world’s lifeblood.

So what’s the real damage here? As I said, in order for CBC to be effective, the IV should not be reused. If it is reused (as it appears to be in the iPhone implementation Nadim wrote about), we get a case where an attacker may start to pull out patterns from the first block. This means that if the first block contains repeatable patterns across multiple messages, it may be possible to detect that repetition and infer some type of meaning. For example, if the message started out with the name of the sender, a pattern could emerge (across multiple encrypted messages using the same key and IV) in the first block that may enable some inference as to the sender of that particular message.
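To make that concrete, here is a toy sketch of CBC chaining. Loud caveat: the block cipher below is a made-up Feistel construction built on SHA-256 so the example runs with only the standard library. It is not AES, it is not secure, and it has nothing to do with the iPhone implementation. All names, keys and messages are hypothetical. Only the chaining behaviour is the point.

```python
import hashlib

BLOCK = 16  # bytes per block, the same size as an AES block

def _round(key, half, i):
    # Toy round function: hash of key, round index and half-block, truncated.
    return hashlib.sha256(key + bytes([i]) + half).digest()[:BLOCK // 2]

def toy_block_encrypt(key, block):
    """Toy 4-round Feistel permutation. NOT AES and NOT secure."""
    left, right = block[:BLOCK // 2], block[BLOCK // 2:]
    for i in range(4):
        mixed = bytes(a ^ b for a, b in zip(left, _round(key, right, i)))
        left, right = right, mixed
    return left + right

def cbc_encrypt(key, iv, plaintext):
    """CBC chaining over the toy cipher; no padding, so whole blocks only."""
    assert len(plaintext) % BLOCK == 0 and len(iv) == BLOCK
    previous, out = iv, []
    for i in range(0, len(plaintext), BLOCK):
        block = plaintext[i:i + BLOCK]
        # XOR with the previous ciphertext block (or the IV for block one).
        xored = bytes(a ^ b for a, b in zip(block, previous))
        previous = toy_block_encrypt(key, xored)
        out.append(previous)
    return b"".join(out)

key = b"k" * 16
fixed_iv = bytes(BLOCK)  # the same all-zero IV reused for every message
msg1 = b"From: Alice".ljust(BLOCK) + b"meet at noon".ljust(BLOCK)
msg2 = b"From: Alice".ljust(BLOCK) + b"cancel the order".ljust(BLOCK)

c1 = cbc_encrypt(key, fixed_iv, msg1)
c2 = cbc_encrypt(key, fixed_iv, msg2)
print("first blocks match with reused IV:", c1[:BLOCK] == c2[:BLOCK])
print("second blocks match:", c1[BLOCK:] == c2[BLOCK:])

c3 = cbc_encrypt(key, b"\x01" * BLOCK, msg2)
print("first blocks match with a different IV:", c1[:BLOCK] == c3[:BLOCK])
```

With the reused IV, the identical first plaintext block produces an identical first ciphertext block, which is the repetition an observer could pick up on. The key is never exposed in any of this.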

Overall, I think the claim of “AES is broken on the iPhone” is a bit overblown, but it’s up to the interpretation of “broken”. If I were to rate this finding on a risk scale from “meh” to “sky is falling”, off the cuff I’d say it was more towards “meh”. I’d appreciate this being fixed by Apple at some point… that is, if they get around to it and can squeeze it in so it doesn’t affect when I can get an iPhone 5… I’d totally apply that patch. But I certainly wouldn’t chuck my phone in the river over this.

A Call to Arms: It is Time to Learn Like Experts

I had an article published in the November issue of the ISSA journal by the same name as this blog post. I’ve got permission to post it to a personal webpage, so it is now available here.

The article begins with a quote:

When we take action on the basis of an [untested] belief, we destroy the chance to discover whether that belief is appropriate. – Robin M. Hogarth

That quote is from his book, “Educating Intuition”, and it really caught the essence of what I see as the struggles in information security. We are making security decisions based on what we believe and then we move on to the Next Big Thing without seeking adequate feedback. This article is an attempt to say that whatever you think of the “quant” side of information security needs to be compared to what we have without quants – which is an intuitive approach. What I’ve found in preparing for this article is that the environment we work in is not conducive to developing a trustworthy intuition on its own. As a result, we have justification in challenging unaided opinion when it comes to risk-based decisions and we should be building feedback loops into our environment.

Have a read. And by all means, feedback is not only sought, it is required.

Risk Analysis is a Voyage

OWASP Risk Rating Methodology. If you haven’t read about this methodology, I highly encourage that you do. There is a lot of material there to talk and think about.

To be completely honest, my first reaction is “what the fudge-cake is this crud?” It symbolizes most every challenge I think we face with information security risk analysis methods. However, my pragmatic side steps in and tries to answer a simple question, “Is it helpful?” Because the one thing I know for certain is the value of risk analysis is relative and on a continuum ranging from really harmful to really helpful. Compared to unaided opinion, this method may provide a better result and should be leveraged. Compared to anything else from current (non-infosec) literature and experts, this method is sucking on crayons in the corner. But the truth is, I don’t know if this method is helpful or not. Even if I did have an answer I’d probably be wrong since its value is relative to the other tools and resources available in any specific situation.

But here’s another reason I struggle: risk analysis isn’t easy. I’ve been researching risk analysis methods for years now and I feel like I’m just beginning to scratch the surface – the more I learn, the more I learn I don’t know. It seems that trying to make a “one-size fits all” approach always falls short of expectations. Perhaps this point is better made by David Vose:

I’ve done my best to reverse the tendency to be formulaic. My argument is that in 19 years we have never done the same risk analysis twice: every one has its individual peculiarities. Yet the tendency seems to be the reverse: I trained over a hundred consultants in one of the big four management consultancy firms in business risk modeling techniques, and they decided that, to ensure that they could maintain consistency, they would keep it simple and essentially fill in a template of three-point estimates with some correlation. I can see their point – if every risk analyst developed a fancy and highly individual model it would be impossible to ensure any quality standard. The problem is, of course, that the standard they will maintain is very low. Risk analysis should not be a packaged commodity but a voyage of reasoned thinking leading to the best possible decision at the time.

-David Vose, “Risk Analysis: A Quantitative Guide”

So here’s the question I’m thinking about, without requiring every developer or infosec practitioner to become experts in analytic techniques, how can we raise the quality of risk-informed decisions?

Let’s think of the OWASP Risk Rating Methodology as a model, because, well, it is a model. Next, let’s consider the famous George Box quote, “All models are wrong, but some models are useful.” All models have to simplify reality at some level (thus never perfectly represent reality) so I don’t want to simply tear apart this risk analysis model because I can point out how it’s wrong. Anyone with a background in statistics or analytics can point out the flaws. What I want to understand is how useful the model is, and perhaps in doing that, we can start to determine a path to make this type of formulaic risk analysis more useful.

Risk Analysis is a voyage, let’s get going.

Yay! We Have Value, Part 2

There are very few things more valuable to me than someone constructively challenging my thoughts. I have no illusions thinking I’m right and I’m fully aware that there is always room for improvement in everything. That’s why I’m excited that lonervamp wrote up “embrace the value, any value, you can find” providing some interesting challenges to my previous post on “Yay! we have value now!”

Overall, I’d like to think we’re more in agreement than not, but I was struck by this quote:

Truly, we will actually never get anywhere if we don’t get business leaders to say, "We were wrong," or "We need guidance." These are the same results as, "I told ya so," but a little more positive, if you ask me. But if leaders aren’t going to ever admit this, then we’re not going to get a chance to be better, so I’d say let ’em fall over.

Crazy thought here… What if they aren’t wrong? What if security folks are wrong? I’m not going to back that up with anything yet. But just stop and think for a moment, what if the decision makers have a better grasp on expected loss from security breaches than security people? What would that situation look like? What data would we expect to find to make them right and security people wrong? Why do some security people find some pleasure when large breaches occur? Stop and picture those for a while.

I don’t think anyone would say it’s that black and white and I don’t think there is a clear right or wrong here, but I thought I’d attempt to shift perspectives there, see if we could try on someone else’s shoes. I tend to think that hands down, security people can describe the failings of security way better than any business person. However, and this is important, that’s not what matters to the business. I know that may be a bit counter-intuitive, our computer systems are compromised by the bits and bytes. The people with the best understanding of those are the security people, how can they not be completely right in defining what’s important? I’m not sure I can explain it, but that mentality is represented in the post that started this discussion. This sounds odd, but perhaps security practitioners know too much. Ask any security professional to identify all the ways the company could be shut down by attackers and it’d probably be hard to get them to stop. Now figure out how many companies have experienced losses anything close to those and we’ve got a very, very short list. That is probably the disconnect.

Let me try and rephrase that, while security people are shouting that our windows are susceptible to bricks being thrown by anyone with an arm (which is true), leaders are looking at how often bricks are thrown and the expected loss from it (which isn’t equal to the shouting and also true). That disconnect makes security people lose credibility (“it’s partly cloudy, why are they saying there’s a tornado?”) and vice versa (“But Sony!”). I go back to neither side is entirely wrong, but we can’t be asking leadership to admit they’re wrong without some serious introspection first.

I’d like to clarify my point #3 too. Ask the question: how many hack-worthy targets are there? Whether explicitly or not, everyone has answered this in their head, and most everyone is probably off (including me). When we see poster children like RSA, Sony, HBGary and so on, we have to ask ourselves how likely it is that we are next. There are a bazillion variables in that question, but let’s just consider it as a random event (which is false, but the exercise offers some perspective). First, we have to picture “out of how many?” Definitely not more than 200 Million (registered domain names), and given there are 5 Million U.S. companies (1.1 Million making over 1M, 7,500 making over 250M), can we take a stab at how many hack-worthy targets there are in the world? 10 thousand? Half a million? Whatever that figure is, compare it to the number of seriously impactful breaches in a year. 1? 5? 20? 30? Whatever you estimate here, it’s a small, tiny number. Let’s take the worst case of 30/7,500 (max breaches over min hack-worthy), which comes out to a 1 in 250 chance. That’s about the same chance a white person in the US will die of myeloma or that a U.S. female will die of brain cancer. It might even be safe to say that in any company, female employees will die of brain cancer more often than a major/impactful security breach will occur. Weird thought, but that’s the fun of reference data points and quick calculations.
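The quick calculation is easy to rerun with your own guesses. This is a sketch only: the function name is made up, the pairings after the first are arbitrary combinations of the guesses floated above, and it keeps the same (false, but useful) assumption that breaches are random draws from the pool of hack-worthy targets.

```python
from fractions import Fraction

def one_in(breaches_per_year, hack_worthy_targets):
    """Return N such that the yearly chance of being hit is '1 in N'."""
    return Fraction(hack_worthy_targets, breaches_per_year)

# Worst case from above: the most breaches over the fewest targets.
print("1 in", one_in(30, 7_500))

# Other combinations of the guesses, for comparison.
print("1 in", one_in(20, 10_000))
print("1 in", one_in(5, 500_000))
```

Swapping in a bigger denominator is exactly the perspective shift: the same handful of headline breaches looks very different against half a million targets than against a few thousand.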

This is totally back-of-the-napkin stuff, but people do these calculations without reference data and in their head. Generally people are way off on these estimations. It’s partly why we think Sony is more applicable than it probably is (and why people buy lottery tickets). The analogy LonerVamp made about the break-ins in the neighborhood doesn’t really work, it puts the denominator too small in our heads. Neighborhoods are pictured, I’d guess as a few dozen, maybe 100 homes max, and makes us think we’re much more likely to be the next target. Perhaps we could say, “imagine you live in a neighborhood of 10,000 houses and one of them was broken into…” (or whatever the estimate of hack-worthy targets is).

I bet there’s an interesting statistic in there, that 63 percent of companies think they are in the top quarter of prime hack-worthy targets (yeah, made that up, perhaps there’s some variation of the Dunning-Kruger effect for illusory hack-worthiness). Anyway, I’m cutting the rest of my points for the sake of readability. I’d love to continue this discussion and I hope I didn’t insult lonervamp (or anyone else) in this discussion, that isn’t my intent. I’m trying to state my view of the world and hope that others can point me in whatever direction makes more sense.

Yay! We Have Value Now!

I haven’t written in a while, but I was moved to bang on the keyboard by a post over at Risky Biz. I don’t want to pick on the author, he’s expressing an opinion held by many security people. What I do want to talk about is the thinking behind “Why we secretly love LulzSec”. Because this type of thinking is, I have to say it: sophomoric.

Problem #1: It assumes there is some golden level of “secure enough” that everyone should aspire to. If a company doesn’t put in a moat with some type of flesh-eating animal in it, they’re a bunch of idiots and they deserve to be bullrushed because it’s risky to not have a moat, right? Wrong, this type of thinking kills credibility and diminishes the influence infosec can have on the business (basically this thinking turns otherwise smart people into whiners). The result is that the good ideas of security people are dismissed and little-or-no progress is made which leads to…

Problem #2: It implies that security people know the business better than the business leaders. Maybe this is caused by an availability bias, but some of the most inconsistent and irrational ranting I have seen has come from information security professionals. I haven’t seen anyone else make a fervent pitch for (what is seen as obvious) change and walk out rejected and have no idea why. This is closely related to the first problem – this thinking implies that information security is an absolute and whatever the goals and objectives are for the company, they should all still want to be secure. That just isn’t reality. Risk tolerance is relative, multi-faceted, usually in a specific context and really hard to communicate. I think @ristical said it best (and I’m paraphrasing) with “leadership doesn’t care about *your* risk tolerance”.

Problem #3: This won’t change most people’s opinion of the role of corporate information security. Saying “I told you so” will put you back into problem #2. It’s simple numbers. We’re pushing 200 Million domain names, the U.S. has over 5 million companies and we’re going to see a record, what, 15-20 large breaches this year? Odds are pretty good that whatever company we’re working at won’t be a victim this year. There are some flaws in this point (and exploring these flaws is where I think we can make improvements), but this is the perception of decision makers, and that brings us to the final problem with this thinking. We need more tangible proof to really believe in hard-to-fix things like global warming: we fix broken stuff when the pain of not fixing something hurts more than fixing something. And let’s be honest, in the modern complex network of complex systems, fixing security is deceptively hard. It’s going to have to hurt a lot for the current needle to be moved. The entire I.T. industry is built on our high tolerance for risk and most companies just aren’t seeing that level of comparable pain.

Problem #4: Companies are as insecure as they can be (hat tip to Marcus Ranum who I believe said this about the internet). To restate that, we’re not broken enough to change. Despite all the deficiencies in infosec and the ease with which companies can fall to script kiddies (who are now armed to the teeth), we are still functioning, we are still in business. Don’t get me wrong, the amount of resources devoted to infosec has increased exponentially in the last 15 years. Companies care about information security, but in proportion to the other types of risks they are facing as well.

Are companies blatantly vulnerable to attacks? Hellz ya. Do I secretly love LulzSec? Hellz No (aside from the joy of watching a train wreck unfold and some witty banter). I don’t see the huge momentum in information security being shifted by a “told ya so” mentality. I only see meaningful change through visibility, metrics and analysis and even then only from within the system. Yes, companies may be technically raped in short order, but that doesn’t mean previous security decisions were bad. We didn’t necessarily make a bad decision building a house just because a tornado tore it down. Let’s keep perspective here. Whether or not Sony put on a red dress and walked around like a whore doesn’t make them any less of a victim of rape and the attackers any less like criminals and security professionals should be asking why there is a difference in risk tolerance rather than saying “I told you so.”

7 Steps to Risk Management Payout

I was thinking about the plethora of absolutely crappy risk management methods out there and the commonalities they all end up sharing. I thought I’d help anyone wanting to either a) develop their own internal methodology or b) get paid for telling others how to do risk management. For them, I have created the following approach which enables people to avoid actually learning about information risk, uncertainty, decision science, cognitive biases or anything else usually important in creating and performing risk analysis.

The beauty of this approach is that it’s foundational. When people realize that it’s not actually helpful, it’s possible to build a new (looking) process by mixing up the terms, categories and breakdowns. While avoiding learning, people can stay in their comfort zone and do the same approach over and over in new ways each time. Everyone will be glad for the improvements until those don’t work out, then the re-inventing can occur all over again following this approach.

Here we go, the 7 steps to a risk management payout:

Step 1: Identify something to assess.

Be it an asset, system, process or application. This is a good area to allow permutations in future generations of this method by creating taxonomies and overly-simplified relationships between these items.

Step 2: Take a reductionist approach

Reduce the item under assessment into an incomplete list of controls from an external source. Ignore the concept of strong emergence because it’s both too hard to explain and too hard for most anyone else to understand let alone think is real. Note: the list of controls must be from an external source because they’re boring as all get-out to create from scratch and it gives the auditor/assessor an area to tweak in future iterations as well. Plus, if this is ever challenged, it’s always possible to blame the external list of controls as being deficient.

Step 3: Audit Assess

Get a list of findings from the list of controls, but call them “risk items”. In future iterations it’s possible to change up that term or even to create something called a “balanced scorecard”, doesn’t matter what that is, just make something up that looks different than previous iterations and go on. Now it’s time for the real secret sauce.

Step 4: Categorize and Score (analyze)

Identify a list of categories on which to assess the findings and score each finding based on the category, either High/Medium/Low or 1-5 or something else completely irrelevant. I suggest the following two top-level categories as a base because they seem to capture what everyone is thinking anyway:

- A score based on the worst possible case that may occur, label this “impact” or “consequence” or something. If it’s possible to bankrupt the entire company rate it high, rate it higher if it’s possible to create a really sensational chain of events that leads up to the worst-case scenario. It helps if people can picture it. Keep in mind that it’s not helpful to get caught up in probability or frequency, people will think they are being tricked with pseudo-science.

- A score based on media coverage and label this “likelihood” or “threat”. The more breaches in the media that can be named, the higher the score. In this category, it helps to tie the particular finding to the breach, even if it’s entirely speculative.

Step 5: Fake the science

Multiply, add or create a look up table. If a table is used, be sure to make it in color with scary stuff being red and remember there is no green color in risk. If arithmetic is used, future variation could include weights or further breaking down the impact/likelihood categories. Note: Don’t get tangled up with proper math at this point, just keep making stuff up, it’s gotten us this far.

Step 6: Think Dashboard

Create categories from the output scores. It’s not important that it be accurate. Just make sure the categories are described with a lot of words. The more words that can be tossed at this section, the less likely people will be to read the whole thing, making them less likely to challenge it. Remember not to confuse decision makers with too many data points. After all they got to where they are because they’re all idiots, right?

Step 7: Go back and add credibility

One last step. Go back and put acronyms into the risk management process being created. It’s helpful to know what these acronyms mean, but don’t worry about what they represent, nobody else really knows either so nobody will challenge it. On the off chance someone does know these, just say it was more inspirational than prescriptive. By combining two or more of these, the process won’t have to look like any of them. Here’s a couple of good things to cite as feeding this process:

- ISO-31000, nobody can argue with international standards

- COBIT or anything even loosely tied to ISACA, they’re all certified, and no, it doesn’t matter that COBIT is more of a governance framework

- AS/NZS 4360:2004, just know it’s from Australia/New Zealand

- NIST-SP800-30 and 39, use them interchangeably

- And finally, FAIR because all the cool kids talk about it and it’s street cred

And there ya have it, 7 steps to a successful Risk Management Methodology. Let me know how these work out and what else can be modified so that all future promising young risk analysis upstarts can create a risk analysis approach without being confused by having to learn new things. The real beauty here is that people can do this simple approach with whatever irrelevant background they happen to have. Happy risking!

Defining Risk

There are about as many definitions of risk as people you can ask and I’ve spent far too much energy pursuing this elusive definition but I think I can say, I’ve reached a good place. After all my reading, pontifications and discussions I feel that I am ready to answer the deceptively simple question “how do you define risk?” with this very simple answer:

I don’t know.

Oh, I can toss things out there like “the probable frequency and probable magnitude of future loss” from the FAIR methodology. I could also wax philosophical about how I *mostly* agree with Douglas Hubbard’s well-developed definition of “A state of uncertainty where some of the possibilities involve a loss” (note: I *mostly* agree just to pretend that I know something Mr. Hubbard doesn’t).

But if I don’t know, how can I say that I’ve reached a good place pursuing a risk definition? Because I have accepted the ambiguity and I’ve realized that terminology and definitions exist simply to help communicate concepts or ideas. That’s where we should be spending our efforts, behind the definitions. In that light, I have come to believe that definitions don’t have to be 100% right, they simply have to be helpful. Take the definition of risk from ISO 31000: “the effect of uncertainty on objectives”. That sounds cool, even after thinking about it for a while, but when it comes to being helpful? Nope, not even close. I may have an objective of defining risk and I’m immersed in uncertainty but I wouldn’t call the effect of that uncertainty “risk”. If anything, that definition leaves me more confused than when I started.

There’s some good news though: problems in defining central terms aren’t unique to risk. Take this from Melanie Mitchell:

In 2004 I organized a panel discussion on complexity at the Santa Fe Institute’s annual Complex Systems Summer School. It was a special year: 2004 marked the twentieth anniversary of the founding of the institute. The panel consisted of some of the most prominent members of the SFI faculty…all well-known scientists in fields such as physics, computer science, biology, economics and decision theory. The students at the school…were given the opportunity to ask any question of the panel. The first question was, “How do you define complexity?” Everyone on the panel laughed, because the question was at once so straightforward, so expected, and yet so difficult to answer.

She goes on in her book to say “Isaac Newton did not have a good definition of force” and “geneticists still do not agree on precisely what the term gene refers to at the molecular level.”

I take comfort in these stories, we are not unique, we are not alone.

As we move forward in the pursuit of information risk, let’s stay focused on where the real work should be done: measuring and communicating risk. Let’s put a little less effort into defining it just yet. Don’t get me wrong, definitions are helpful, but let’s not get all wrapped up in the precision of words when we’re still struggling with the concepts they are describing.

What are we fighting for?

I’ve written about this topic at least a half dozen times now, I’ve saved each one as a draft and I’m giving up – I’m asking for help. I was inspired to do this by a video, “Where Good Ideas Come From” (Bob Blakely posted the link on Twitter). I can’t find the answer to this puzzle, at least not in any meaningful way, not by myself. I’ll break down where I am in the thought process and hope that I get some feedback. (note: For the purpose of this discussion I’m using “security” as a group of people and technology intended to protect assets.)

Business

The goal of business is pretty well understood. For-profit companies are after profit and all the things that would affect that, with reputation and customer confidence being at the top of the list for information security. From a business perspective, what I think is considered a successful security program is spending just enough on security and not too much. Spending too much on security should not be considered a success, any more than failed security should (though the two are not equally bad). The goal isn’t perfect security, the goal is managed security. There is a point of diminishing returns in that spending; at some point there is just enough security.

I think of a production line manufacturing some physical widget. While it’d be really cool to have zero defects, most businesses spend just enough to keep product defects within some tolerance level. Translating to infosec, the goal from a business perspective is to spend enough (on security) to meet some level of business risk tolerance. That opens up a whole different discussion that I’ll avoid for now. But my point is that there should be a holistic view of information security. Since protecting information is only one variable in reaching the goal of being profitable, it could easily be a good decision to increase spending and training for public relations staff to respond to any breach rather than to prevent a specific subset of breaches. Having the goal in mind enables those types of flexible trade-offs.

InfoSec

At most every infosec talk I go to, the goal appears to be security for the sake of security. In other words, the goal is to not have security fail. The result is that the focus shifts onto prevention, and statements of risk stop short of being meaningful. “If X and Y happen an attacker will have an account on host Z” is a statement on security, not risk. It’s a statement of an action with an impact on security, not an impact on the broader goal. This type of focus devalues detective controls in the overall risk/value statement (everyone creates a mental measurement of risk/value in their own head). Once a detective control like logging is triggered in a breach, the security breach has occurred. The gap is that the real reason we’re fighting (the bigger picture, the goal) hasn’t yet been impacted. However, and this is important, because the risk is perceived from a security perspective, emphasis and priorities are often misplaced. Hence, the question in the title. I don’t think we should be fighting for good security, we should be fighting for good-enough security.

Government

I think this may be a special case where the goal is in fact security, but I have very little experience here. I won’t waste time pontificating on the goals for government. But this type of thing factors into the discussion. If infosec in government has a different goal than private enterprises, where are the differences and similarities?

Compliance

The simple statement of “Compliance != Security” implies that the goal is security. What are we fighting for? It becomes pretty clear why some of the compliant yet “bad” security decisions were made if we consider that the goal wasn’t security. Compliance is a business concern, the correlation to infosec is both a blessing and curse.

Where am I heading?

So I’m seeing two major gaps as I type this. First thing is I don’t think there is any type of consensus around what our goal is in information security. My current thought is that perfect security is not the goal and that security is just a means to some other end. I think we should be focusing on where that end is and how we define “just enough” security in order to meet that. But please, help me understand that.

Second thing is the problem this causes, the “so what” of this post. We lack the ability to communicate security and consequently risk because we’re talking apples and oranges. I’ve been there, I’ve laid out a clear and logical case why some security thingamabob would improve security only to get some lame answer as to why I was shot down. I get that now. I wasn’t headed in the same direction as others in the conversation. The solution went towards my goal of security, not our goal of business. Once we’re all headed towards the same goals we can align assumptions and start to have more productive discussions.

For those who’ve watched that video I linked to in the opening, I’ve got half an idea. It’s been percolating for a long time and I can’t seem to find the trigger that unifies this mess. I’m putting this out there to hopefully trigger a response – a “here’s what I’m fighting for” type response. Because I think we’ve been heading in a dangerous direction focusing on security for the sake of security.

A Matter of Probability

I was reading an article in Information Week on some scary security thing, and I got to the one and only comment on the post:

Most Individuals and Orgs Enjoy "Security" as a Matter of Luck

Comment by janice33rpm Nov 16, 2010, 13:24 PM EST

I know the perception: there are so many opportunities to, well, improve our security that people think it’s a miracle that a TJX-style breach hasn’t occurred to them a hundred times over and that it’s only a matter of time. But the breach data paints a different story than “luck”.

As I thought about it, that word “luck” got stuck in my brain like some bad 80’s tune mentioned on twitter. I started to question, what did “lucky” really mean? People who win while gambling could be “lucky”, lottery winners are certainly “lucky”. Let’s assume that lucky then means beating the odds for some favorable outcome, and unlucky means unfavorable, but still defying the odds. If my definition is correct then the statement in the comment is a paradox. “Most” of anything cannot be lucky, if most people who played poker won then it wouldn’t be lucky to win, it would just be unlucky to lose. But I digress.

I wanted to understand just how “lucky” or “unlucky” companies are as far as security goes, so I did some research. According to Wolfram Alpha there are just over 23 million businesses in the 50 United States, and I consider being listed in something like datalossDB.org to be a measurement of “not enjoying security” (security fail). Using the three years from 2007 to 2009, I pulled the number of unique businesses from the year-end reports on datalossDB.org (321, 431 and 251), which means that a registered US company has about a 1 in 68,000 chance in a year of ending up on datalossDB.org. I would not call those not listed “lucky”; that would be like saying someone is “lucky” if they don’t get a straight flush dealt to them in 5-card poker (a 1 in 65,000 chance of that).
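The arithmetic behind those two odds can be sketched in a few lines of Python. This is only a rough check under my own assumptions: a round 23,000,000 stands in for “just over 23 million”, the three yearly counts are simply averaged, and the variable names are illustrative. The straight-flush figure counts all 40 straight flushes (royal flushes included) out of every possible 5-card hand.

```python
from math import comb

# Unique businesses per year in the datalossDB.org year-end reports, 2007-2009.
yearly_listed = [321, 431, 251]

# Assumption: "just over 23 million" rounded down to an even 23 million.
total_businesses = 23_000_000

# Average number of businesses listed per year, then the "1 in N" odds.
avg_listed = sum(yearly_listed) / len(yearly_listed)
breach_one_in = total_businesses / avg_listed

# Straight flush odds: 10 possible high cards (5 through ace) in each of 4 suits.
total_hands = comb(52, 5)
straight_flushes = 10 * 4
flush_one_in = total_hands / straight_flushes

print(f"Listed on datalossDB.org in a year: about 1 in {breach_one_in:,.0f}")
print(f"Dealt a straight flush:             about 1 in {flush_one_in:,.0f}")
```

Both results land in the same neighborhood as the rounded figures in the text, which is all the comparison needs.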

But this didn’t sit right with me. That was a whole lot of companies, and most of them could just be companies on paper and not be on the internet. I turned to the IRS tax stats, which showed that in 2007, 5.8 million companies filed returns. Of those, about 1 million listed zero assets, meaning they are probably not on the internet in any measurable way. Now we have a much more realistic number: 4,852,748 businesses in 2007 listed some assets to the IRS. If we assume that all the companies in dataloss DB file a return, then there is a 1 in 14,471 chance for a US company to suffer a PII breach in a year (and be listed in the dataloss DB).

Let’s put this in perspective, based on the odds in a year of a US company with assets appearing on dataloss DB being 1 in 14,471:

- If you are female, it is more likely that you’ll die in a transportation accident in a year. (1 in 10,170)

- It is more likely that a person will visit an emergency department due to an accident involving pens or pencils (1 in 13,300)

- (my favorite) It is more likely that a person will visit an emergency department due to an accident involving a grooming device (1 in 10,200)

Aside from being really curious about what constitutes a grooming device, I didn’t want to stop there, so let’s remove a major chunk of companies whose reported assets were under $500,000. 3.8 million companies listed less than $500k in their returns to the IRS in 2007, so that leaves 982,123 companies in the US with assets over $500k. I am just going to assume that those “small” companies aren’t showing in the dataloss stats.

Based on being a US Company with over $500,000 in assets and appearing in dataloss DB at least once (1 in 2,928):

- It is more likely that a person will visit an emergency department due to an accident involving home power tools or saws (1 in 2,795)

- It is more likely that a Hispanic female 12 or older will be the victim of a purse-snatching or pickpocketing (1 in 2,500)

- And finally, it is more likely that a person 6 or older will participate in a non-traditional triathlon in a year (1 in 2,912)
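Both sets of comparisons can be checked with a short sketch. It assumes a plain average of the three yearly datalossDB.org counts (321, 431 and 251) as the number of breached companies per year, so the recomputed odds come out close to, but not exactly at, the 1 in 14,471 and 1 in 2,928 quoted above. The structure and names here are mine, for illustration only; the reference odds are copied from the lists.

```python
# Assumption: average yearly count of unique businesses listed, 2007-2009.
avg_listed = (321 + 431 + 251) / 3

# Each tier: (number of companies, [(everyday event, quoted "1 in N" odds), ...])
tiers = {
    "US company with any assets": (4_852_748, [
        ("female dying in a transportation accident", 10_170),
        ("ER visit involving pens or pencils", 13_300),
        ("ER visit involving a grooming device", 10_200),
    ]),
    "US company with over $500k in assets": (982_123, [
        ("ER visit involving home power tools or saws", 2_795),
        ("purse-snatching or pickpocketing (Hispanic female 12+)", 2_500),
        ("non-traditional triathlon (age 6+)", 2_912),
    ]),
}

def more_likely(event_one_in, breach_one_in):
    """A smaller N in '1 in N' means the event is the more likely one."""
    return event_one_in < breach_one_in

results = {}
for tier, (companies, events) in tiers.items():
    breach_one_in = companies / avg_listed
    print(f"{tier}: breach odds about 1 in {breach_one_in:,.0f}")
    for name, event_one_in in events:
        is_more_likely = more_likely(event_one_in, breach_one_in)
        results[(tier, name)] = is_more_likely
        verdict = "more likely" if is_more_likely else "less likely"
        print(f"  {name} (1 in {event_one_in:,}): {verdict} than a listed breach")
```

The narrowest margin is the triathlon comparison, so that is the one most sensitive to whichever breach count is used.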

Therefore, I think it’s paradoxically safe to say:

Most Individuals do not participate in a non-traditional triathlon as a Matter of Luck.

Truth is, it all comes down to probability, specifically the probability of a targeted threat event occurring. In spite of that threat event being driven by an adaptive adversary, the actions of people occur with some measurable frequency. The examples here are pretty good at explaining this point. Crimes are committed by adaptive adversaries as well, and we can see that about one out of every 2,500 Hispanic females 12 or older will experience a loss event from purse-snatching or pickpocketing per year. In spite of being able to make conscious decisions, those adversaries commit these actions with astonishing predictability. Let’s face it, while there appears to be randomness in why everyone hasn’t been pwned to the bone, the truth is in the numbers and it’s all about understanding the probability.
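One way to see why the totals look so predictable is to treat each company as an independent trial with the same yearly probability of being listed. That independence is a simplifying assumption of mine, not something the breach data establishes, and the names below are illustrative. Under it, the yearly count of listed companies follows a binomial distribution, and its mean and standard deviation show how tightly the total should cluster.

```python
from math import sqrt

# Figures from the text: companies with over $500k in assets, and their
# quoted yearly odds of appearing in dataloss DB.
companies = 982_123
p_breach = 1 / 2_928

# Binomial mean and standard deviation for the number listed in a year.
# Assumption: every company is an independent trial with the same probability.
expected = companies * p_breach
std_dev = sqrt(companies * p_breach * (1 - p_breach))

# A rough "typical" band of two standard deviations either side of the mean.
low = expected - 2 * std_dev
high = expected + 2 * std_dev

print(f"Expected companies listed per year: {expected:.0f}")
print(f"Standard deviation:                 {std_dev:.1f}")
print(f"Typical range (2 std devs):         {low:.0f} to {high:.0f}")
```

Under these assumptions, whether any single company gets listed is very uncertain, while the yearly total moves only a few percent around its average. That is the sense in which individually chosen actions can still add up to a predictable frequency.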