Archive

The Simple Power of OpenPERT: ALE 2.0

Yup, Chris Hayes (and I) have released the 1.0 version of OpenPERT. I had a sneaking suspicion that most people would do what I did with my first Excel add-in: “Okay, I installed it, now what?” Then perhaps skim some document on it, type in a few formulas and walk away thinking about lunch or something. In an attempt to keep OpenPERT from being *that* add-in, we created something to play with: the next generation of the infamous ALE model. We call it “ALE 2.0”.

About ALE

ALE stands for Annualized Loss Expectancy and it is taught (or was taught back in my day) to everyone studying for the CISSP exam. The concept is easy enough: estimate the annual rate of occurrence (ARO) of an event and multiply it by the single loss expectancy (SLE) of that event. The output is the annualized loss expectancy, or ALE. Typically people are instructed to use single-point estimates, and if this method has ever been used in practice it’s generally used with worst-case numbers or perhaps an average. Either way, you end up with a single number that, while precise, will most likely not line up with reality no matter how much effort you put into your estimations.
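As a minimal sketch of the classic calculation (the function name is made up for illustration, and the inputs are the "most likely" and worst-case figures from the virus case later in this post), the single-point version is one multiplication:

```python
def annualized_loss_expectancy(aro: float, sle: float) -> float:
    """Classic single-point ALE: annual rate of occurrence times single loss expectancy."""
    return aro * sle


# Illustrative inputs: 30 infections a year at $50 each,
# or a worst case of 260 infections at $200 each.
most_likely_ale = annualized_loss_expectancy(30, 50)
worst_case_ale = annualized_loss_expectancy(260, 200)

print(most_likely_ale)  # 1500
print(worst_case_ale)   # 52000
```

Depending on which point estimate you pick, the answer is $1,500 or $52,000, and neither number says anything about how likely it is.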

Enter the next generation of ALE

ALE 2.0 leverages the BETA distribution with PERT estimates and runs a Monte Carlo simulation. While that sounds really fancy and perhaps a bit daunting, it’s really quite simple and most people should be able to understand the logic by digging into the ALE 2.0 example.

Let’s walk through ALE 2.0 by going through a case together: let’s estimate the annual cost of handling virus infections in some made-up mid-sized company.

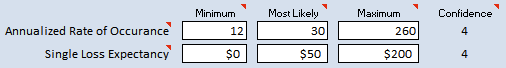

For the annual rate of occurrence, we think we get around 2 virus infections a month on average; some months there aren’t any, and every few years we get an outbreak infecting a few hundred. Let’s put that in terms of a PERT estimate. At a minimum (in a good year), we’d expect maybe 12 infections a year, most likely there are 30 per year, and in bad years we could see 260.

For the single loss expectancy, we may see nothing: the anti-virus picks it up and cleans it automatically and there’s no loss. Most likely, we spend 30 minutes to manually thump it. Worst case, we do a system rebuild taking 2 hours; that’s non-dedicated time, but there are some other overhead tasks. Putting that in terms of money, we may say the minimum is $0, most likely, oh, $50 of time, and worst case, $200.
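A quick sanity check on those two estimates, before any simulation: the classic PERT weighted average is (minimum + 4 × most likely + maximum) / 6. The sketch below assumes that standard weight of 4 applies; the post does not say what weighting OpenPERT uses internally, and the class name is made up for illustration.

```python
from typing import NamedTuple


class PertEstimate(NamedTuple):
    """A three-point estimate: minimum, most likely, maximum."""
    minimum: float
    most_likely: float
    maximum: float

    def mean(self) -> float:
        # Classic PERT weighted average (assumed weight of 4 on the most likely value).
        return (self.minimum + 4 * self.most_likely + self.maximum) / 6


aro = PertEstimate(12, 30, 260)  # infections per year
sle = PertEstimate(0, 50, 200)   # dollars per infection

print(round(aro.mean(), 1))             # 65.3
print(round(sle.mean(), 1))             # 66.7
print(round(aro.mean() * sle.mean()))   # 4356
```

If ARO and SLE are estimated independently, the product of the two means, roughly $4,356 a year, is where the average of a long simulation should settle.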

Now let’s hit the button. Some magic happens and out pops the output:

There were a couple of simulations with no loss (the min value) from responding to viruses given those inputs, on average there was about $4,400 in annualized loss, and there were some really bad years (in this case) going up to around $35k. There are some statements to make from this as well, like “10% of the ALE simulations exceeded $9,000.”
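Statements like these are just summaries over the list of simulated annual losses. Here is a rough sketch of how they could be computed; the function name and the ten sample values are hypothetical stand-ins, not the workbook's actual output.

```python
import statistics


def summarize(losses, threshold):
    """Return min, mean, max and the share of simulated years above a threshold."""
    above = sum(1 for loss in losses if loss > threshold)
    return {
        "min": min(losses),
        "mean": statistics.fmean(losses),
        "max": max(losses),
        "share_above": above / len(losses),
    }


# Hypothetical simulated annual losses in dollars (made up for illustration).
sample = [0, 1200, 2500, 3100, 4400, 5200, 6000, 7500, 8800, 35000]
summary = summarize(sample, threshold=9000)

print(summary["min"], summary["max"])  # 0 35000
print(summary["share_above"])          # 0.1
```

With a real run you would pass in all 5000 simulated values instead of ten made-up ones.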

But the numbers, or even ALE for that matter, aren’t what this is about. It’s about understanding what OpenPERT can do.

What’s going on here?

Swap over to the “Supporting Data” tab in Excel; that’s where the magic happens. Starting in cell A2, we call the OPERT() function with the values entered for ARO. The OPERT function takes in the minimum, most likely and maximum values, plus an optional confidence value. In the cell, the function returns a random value drawn from a beta distribution shaped by those values. This ARO calculation is repeated for 5000 rows in column A; that’s the Monte Carlo portion. Column B has all of the SLE calculations (OPERT function calls on the SLE estimates) for the 5000 simulations, and column C is just the ARO multiplied by the SLE (the ALE for that simulation).

In summary, this leverages the OPERT() function to return a single random instance for each of the two input estimations (ARO and SLE), and we repeat that to get a large enough sample size (and 5000 is generally a large enough sample size, especially for this type of broad-stroke ALE technique).
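The same logic can be sketched outside Excel. This is not OpenPERT's code. It assumes the common beta-PERT parameterization with a weight of 4 on the most likely value, it does not model OPERT()'s optional confidence argument, and the function names are made up for illustration.

```python
import random
import statistics


def pert_sample(minimum, most_likely, maximum, lam=4.0, rng=random):
    """Draw one random value from a beta-PERT distribution (assumed weight lam=4)."""
    if not minimum <= most_likely <= maximum:
        raise ValueError("need minimum <= most_likely <= maximum")
    span = maximum - minimum
    if span == 0:
        return float(minimum)
    # Shape parameters of the beta distribution on [0, 1].
    alpha = 1.0 + lam * (most_likely - minimum) / span
    beta = 1.0 + lam * (maximum - most_likely) / span
    # Rescale the [0, 1] draw back onto [minimum, maximum].
    return minimum + rng.betavariate(alpha, beta) * span


def simulate_ale(aro, sle, runs=5000, seed=None):
    """One ARO draw times one SLE draw per row, like columns A, B and C."""
    rng = random.Random(seed)
    return [pert_sample(*aro, rng=rng) * pert_sample(*sle, rng=rng)
            for _ in range(runs)]


# The virus case: (minimum, most likely, maximum) for ARO and SLE.
losses = simulate_ale(aro=(12, 30, 260), sle=(0, 50, 200), runs=5000, seed=1)

print(f"min   ${min(losses):,.0f}")
print(f"mean  ${statistics.fmean(losses):,.0f}")
print(f"max   ${max(losses):,.0f}")
# Last of the nine decile cut points is the 90th percentile.
print(f"90th percentile  ${statistics.quantiles(losses, n=10)[-1]:,.0f}")
```

The seed is fixed only to make a run repeatable; drop it to get a fresh set of 5000 simulated years each time, which is what the spreadsheet does on recalculation.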

Also, if you’re curious, the table to the right of column C is the data used to construct that pretty graph on the first tab (shown above).

Next Steps

The ALE method itself may have limited application, but it’s the thought process behind it that’s important. The combination of PERT estimations with the beta distribution to feed into Monte Carlo simulations is what makes this approach better than point estimates. This could be used for a multitude of applications, say for estimating the piano tuners in Chicago or any number of broad estimations, some of them even related to risk analysis hopefully. We’re already working on the next version of OpenPERT too, in which we’re going to integrate Monte Carlo simulations and a few other features.

It would be great to build out some more examples. Can you think of more ways to leverage OpenPERT? Having problems getting it to work? Let us know, please!

Statistical Literacy

If I could choose one subject to force everyone to become literate in, it’d be statistics, specifically around probability and randomness. Notice I didn’t say that people should learn statistics. People have done that. They memorized facts and formulas long enough to pass a course, but most people are not literate in it. They continually fall prey to basic errors any good professor warns students of. So I thought I’d toss out a couple of things to get your juices flowing around statistical literacy.

The first entry in this is a quick 3-minute TED talk from Arthur Benjamin on a formula for changing math education. I’m linking his talk here just to whet the palate.

The next is a very old book in technical terms but still very applicable. It’s titled “How to Lie With Statistics” by Darrell Huff. It’s a relatively short book, and easily consumable. Coming in at under $10, it’s something that everyone should have on their bookshelf. I was amazed at how much advertising and sales tactics still leverage the techniques listed in this book. It is an excellent step towards statistical literacy.

Next was the impetus for this post: I found a link to the Statistics Policy Archive over at the Parliament’s website. For example, the 7-page guide on Uncertainty and Risk has this little nugget about estimation: “At the very least a range acknowledges there is some uncertainty associated with the quantity in question.” I’d like some coworkers to understand that statement. Doing things like reducing resources for a volatile project to a single number drives me stinkin’ nuts. Keep in mind that these appear to be written towards policy makers in the parliament who need a primer on statistics. I’m still making my way through these docs.

Last point of reference is one of the best books I’ve ever read, “The Drunkard’s Walk: How Randomness Rules Our Lives” by Leonard Mlodinow. Not only does he have a light and easy writing style, he defines probability by describing the lack of probability: randomness. Aside from being an excellent geek-out topic, randomness and probability are part of every daily task and decision we make. This book takes the reader two steps back and then three steps forward.

I bring all of this up because probability, randomness and statistics are so integrated into daily life, and are especially prevalent in I.T. and security. As we talk about security controls, risks and improving the world we’re talking probability. When we architect a solution, code up an application, audit a system we are surrounded by probabilities. Becoming literate in statistics and probabilities is something I’d like to see more of because I think that would improve every aspect of our profession more than anything else at this point.

My Complex Hospital Stay

I spent some time last week in the hospital having a new foot built for me. I don’t want to dwell too much on the details, but while I was in the hospital, in that drug-induced stupor, I was thinking about “How Complex Systems Fail” by Richard Cook, MD. After all, he wrote that about the complex healthcare system, not information systems, and I was hoping to get some pearl of enlightenment while lying there. It’s been years since he wrote it and I was just hoping to see some little nugget that I could take back to my day job. However, what I found wasn’t illuminating at all, just more reinforcement of why that paper should be mandatory and memorized by all infosec people (and healthcare people).

I’m going to start off with my summary of what I observed about healthcare that I think applies nicely to infosec.

Focus on the Basics**

Citing from Cook’s paper, here’s the statement that pretty much summed up my stay:

Overt catastrophic failure occurs when small, apparently innocuous failures join to create opportunity for a systemic accident.

I had no catastrophic failure while staying in the hospital, but I had an endless supply of small failures and luckily no systemic accident. Anyway, I love how that’s worded and I feel that it may be addressed by focusing on the basics. Any one part of a complex system may not be complex by itself, but the parts become complex when they mix or intertwine with other parts of the system. I think my care at the hospital (and information assets at work) could be better taken care of if we simply focus on the basics and work on doing them better. I’m going to walk through two scenarios to help illustrate the point.

Scenario 1: Apply Ice

During my stay, advice from different “expert” practitioners would contradict others’ advice on moderately unimportant topics: things like how my boot is adjusted, the benefits of elevation or even the purpose behind this or that drug. I was able to easily understand this; I often find disagreement in advice from “experts” in our field. But the separation between advice and practice is where I saw some interesting breakdown.

Nobody would say that icing wasn’t helpful. It’s well understood that icing reduces swelling and would speed my recovery. But it’s the details that diverge, and how far away reality was really surprised me. One advice-giver was specific enough to say 15 minutes of every hour should be spent icing. However, during my 2.5 days in the hospital I received 2 ice packs, and both times I found the pack still under my leg hours afterwards with the ice melted. Both times I had to pry the ice pack out from under my leg and dump it onto the floor.

So how helpful is icing? Like I said nobody would say it wasn’t helpful. But according to their actions, it was less helpful than checking vitals and only slightly less helpful than emptying the bed pan. Right? We’ve got an imbalance in healthcare – a misperception on the cost vs. reward of certain activities. We also have an imbalance in infosec. We’ve got a misperception on the costs/rewards of certain technologies and are not doing things like checking logs as often as we should. Like I said, let’s focus on the basics.

Scenario 2: Vital Signs

When I first arrived for surgery, they attached a blood pressure cuff on my arm and an oxygen monitor on my finger. I was told these would stick with me and it was decided to attach these to my left arm after talking about my right-handedness. During my stay, folks would come in at some interval (2-4 hours) and “check my vitals”. At one point during the first night, when I was barely sensing reality, I woke up and realized that I was sleeping with two blood pressure cuffs and two oxygen monitors, one set on my left side and one on my right. Once I pointed this out to the vital-checker, they removed both. No, I don’t know why. This is what Cook refers to as applying an “end-of-the-chain measure.”

How does this apply to infosec? We need to be aware of misattribution of mistakes. It’s easy to look at this and say that some nurse or assistant screwed up, and they should be scolded or publicly mocked or something. But in reality we need to understand that this was a symptom. We have to back up and truly figure out the root cause. Was there not enough time to communicate? Is the culture anti-organizational-skills? I’d like to say that I’ve never seen this in infosec, but I’d be lying. We’ve all seen security controls installed/mandated that are redundant or worse, harmful (*cough* transparent encryption *cough*).

All in all, we have the tools and we have the ability. I’d like to make a call out for avoiding catastrophic suckiness by simply focusing on the basics. We need to focus on doing what we know how to do and we need to apply it coherently. Pretty simple request I’d say.

**This post and summary is dedicated to my crappy and non-catastrophic stay at Regions Hospital, Saint Paul, MN.

Things that Cannot be Modeled, Should be Modeled Over and Over

I read a post titled “Things that Cannot be Modelled Should not be Modelled” [sic], and to say I’m irritated is an understatement. The post itself is riddled with inconsistencies and leaps of logic, but there is an underlying concept that I struggle with, this notion of “why try?” This concept that we’ve somehow failed, so pack up and head home. I cannot understand this mentality. If there is one thing that history teaches over and over and over, it’s that humans are capable of amazing and astonishing feats and progress, many of which appeared to go against the prevailing concepts of logic or nature at the time.

Precisely Predicting versus Playing the odds

Nobody believes that modeling risk is an attempt to predict an exact future, it’s about probability and laying odds. As Peter L. Bernstein points out in “Against the Gods”, way back in history, the thought of laying odds to rolling dice was thought to be ridiculous. Of course the Gods controlled our fate, so of course, attempting to forecast the throw of dice (quantify risk) was absurd. I’m sure the first few folks who understood odds profited nicely. The concept of probability itself is relatively young in our history and attempting to quantify and model risk is even younger. Of course we’re going to make mistakes. And history shows us that people throughout time have considered these mistakes and acts of progress an affront to the laws of “nature”. I read that post by Jacek Marczyk in that light.

Fact is, every crazy pursuit by humankind has had critics and for good reason. Just focusing on the field of medicine can yield thousands of insanely beneficial and insanely insane acts of science. Books like “Elephants on Acid” show us that people do a lot of weird things and every once in a while, truly amazing things occur. We have the right to question, it’s our duty to challenge, but it is never an option to say don’t try. The whole point is we don’t give up. We plow forward and once we’ve reached a dead-end, we take a step back and try to move forward in another direction.

But back to the article. The article lists things like “human nature” and “feelings” as “impossible to model”. I’m sure fields like Behavioral Economics and most every part of psychology would argue against that. Just reading through books like “Predictably Irrational” or “How We Know What Isn’t So” we can see that human nature and even human feelings have been modeled and benefit has been found in that modeling. How prices get set and advertising campaigns are shaped have both benefitted from attempts at modeling human behavior and feelings. I’d also like to point out that the road to modeling those things is probably filled with a few setbacks and failures, but that doesn’t make it not worth the effort.

Decisions, Decisions

Enough predication, let me get to my point. Being able to quantify or model risk is not a prize unto itself. The purpose of studying, researching and modeling risk is not to simply reach a pinnacle of holy-riskness. The pursuit of risk exists for one simple reason: to support the decision process. Let’s be honest here, those decisions are being made whether or not formal analysis is performed. The true benefit of risk management is to make better, more informed (and hopefully profitable) decisions. Saying that we should not attempt to model or quantify risk is like saying we should not try to make better decisions.

Take flight as an example. When the Wright brothers made their first flight, did folks say, “That flight didn’t make it from New York to Chicago, so shut this whole flight concept down”? Perhaps they did, but those people would now be labeled naysayers, simpletons, perhaps even idiots.

On models

One of the first things taught to furniture makers is to build a model first. Building models allows them to play out different ideas and see problems before they build. It allows them to try out different decisions before they actually make a decision. Even experienced designers wouldn’t fathom squaring up their first piece of wood without a model to educate themselves on the world they are about to construct. Hundreds of years of furniture makers have learned that the cost of not modeling outweighs the investment to model an idea. Models exist to support decisions; they attempt to answer questions around “what if.”

Without models of weather, we wouldn’t have any indication about storms or severe weather short of looking at the sky. We would not be able to fly planes or navigate them accurately without models. We cannot argue that attempting to model our world to support the decision process is a waste of time. History has taught us that the only true failure is not trying in the first place.

To say that we shouldn’t try something based on some perception of the “laws of nature” or pure semantics is insulting. Now if someone wants to argue that our current set of risk models is broken, then bring that on. Step right up and let’s discuss those findings, let’s talk about better decisions and let’s alter the models. Perhaps we even toss our current models aside and start from scratch, but saying that we shouldn’t model at all is self-defeating and creates nothing but noise distracting from the real work. Not attempting to improve our decision process is not an option; attempting new approaches or identifying alterations is not only an option, it should be expected.